Tenant-to-Tenant – PST based Exchange Migration automation approach – Part III

This is Part III of the blog series that describes how you can use PST export and import methods for an Exchange Online migration. In the previous two parts (Part I, Part II) I described how to export mailbox settings and content. Today I will describe how you can use PowerShell and azcopy to upload the exported PSTs to an Azure Blob container, so that they can later be imported into the destination Exchange Online mailboxes.

Azure Blob Container

First, we need to create a storage account whose container will be the target of our PST upload script. You can use the Azure tenant of either the source or the destination; I chose the destination tenant. Go to the Azure portal and create a new storage account.

Basics: Choose a storage plan (performance and redundancy) that fits your needs. I chose the cheapest option because I can recreate the PSTs if something unexpected happens.

Advanced: I disabled public access and changed the access tier to "Cool".

Networking: Choose the network access settings that fit your requirements.

Data protection: In the Data Protection tab I’ve disabled all recovery options.

I skipped the remaining tabs because their settings are not relevant here.

Review the summary and click Create.

Now navigate to the new resource and create a new container.
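The portal steps above can also be scripted. The following is a minimal sketch with the Az PowerShell module (Az.Storage), assuming you have already run Connect-AzAccount against the destination tenant and that the resource group exists; the function name New-PstImportStorage and its parameters are hypothetical and not part of the author's scripts. It reproduces the Basics and Advanced choices described above (cheapest LRS redundancy, Cool access tier, public access disabled) and creates a private container, but it leaves networking and data protection at whatever defaults the cmdlet applies, so review those against your requirements.

```powershell
# Minimal sketch (hypothetical helper). Assumes: Az.Storage module installed,
# Connect-AzAccount done against the destination tenant, resource group already exists.
function New-PstImportStorage {
    param(
        [Parameter(Mandatory)][string]$ResourceGroupName,
        # Storage account names must be globally unique: 3-24 lowercase letters and digits
        [Parameter(Mandatory)][string]$AccountName,
        [Parameter(Mandatory)][string]$Location,
        [string]$ContainerName = 'pstimport'
    )
    # Cheapest redundancy (Standard_LRS), Cool access tier, no anonymous blob access
    $account = New-AzStorageAccount -ResourceGroupName $ResourceGroupName -Name $AccountName `
        -Location $Location -SkuName Standard_LRS -Kind StorageV2 `
        -AccessTier Cool -AllowBlobPublicAccess $false

    # Private container (no anonymous access) as the target for the PST uploads
    New-AzStorageContainer -Name $ContainerName -Context $account.Context -Permission Off | Out-Null
    return $account
}

# Usage ('rg-pstimport' and 'westeurope' are placeholder values):
# $account = New-PstImportStorage -ResourceGroupName 'rg-pstimport' -AccountName 'scalablepstimport' -Location 'westeurope'
```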

To authenticate with azcopy, we use a shared access signature (SAS) token, which you can generate on the new container. The token needs the Read, Add, Create and Write permissions, and its expiry time should cover your migration schedule.

You will then receive the "Blob SAS token" and the "Blob SAS URL". Note both down; we will use them in the upload script.
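Generating the SAS token can be scripted as well. This sketch assumes the Az.Storage cmdlet New-AzStorageContainerSASToken and a key-based storage context; the helpers New-PstUploadSas and Join-SasUrl and the 30-day default lifetime are hypothetical choices, so adjust the lifetime to your migration plan. Depending on the Az.Storage version, the returned token may or may not start with a question mark, so the sketch normalizes it to the "?"-prefixed form the upload script expects.

```powershell
# Minimal sketch (hypothetical helpers). Assumes the Az.Storage module and a
# key-based context, e.g. (Get-AzStorageAccount -ResourceGroupName ... -Name ...).Context
function Join-SasUrl {
    param(
        [Parameter(Mandatory)][string]$ContainerUrl,
        [Parameter(Mandatory)][string]$SasToken
    )
    # Exactly one '?' between URL and token, no trailing '/' on the container URL
    return $ContainerUrl.TrimEnd('/') + '?' + $SasToken.TrimStart('?')
}

function New-PstUploadSas {
    param(
        [Parameter(Mandatory)]$Context,
        [string]$ContainerName = 'pstimport',
        [int]$ValidDays = 30   # hypothetical default; should cover your migration window
    )
    # r = read, a = add, c = create, w = write
    $token = New-AzStorageContainerSASToken -Name $ContainerName -Context $Context `
        -Permission 'racw' -ExpiryTime (Get-Date).AddDays($ValidDays)
    $containerUrl = $Context.BlobEndPoint.TrimEnd('/') + '/' + $ContainerName
    [pscustomobject]@{
        SasToken = '?' + $token.TrimStart('?')   # '?'-prefixed form used by the upload script
        SasUrl   = Join-SasUrl -ContainerUrl $containerUrl -SasToken $token
    }
}
```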

Now download the command-line tool AzCopy (Copy or move data to Azure Storage by using AzCopy v10 | Microsoft Learn) to the machine you will use to upload the PST files. Store azcopy.exe in a folder of your choice and don’t forget to unblock the file.
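Unblocking can be done with the built-in Unblock-File cmdlet instead of the file properties dialog. The helper below, Get-AzCopyExe, is a hypothetical sketch: it assumes azcopy.exe sits directly in the folder you pass in, unblocks it only on Windows (where the downloaded-file mark applies), and returns the full path so you can run azcopy with the call operator.

```powershell
# Minimal sketch (hypothetical helper): locate and unblock azcopy.exe
function Get-AzCopyExe {
    param([Parameter(Mandatory)][string]$Folder)

    $exe = Join-Path -Path $Folder -ChildPath 'azcopy.exe'
    if (-not (Test-Path -LiteralPath $exe)) {
        throw "azcopy.exe not found in '$Folder'"
    }
    # Remove the "downloaded from the internet" mark; this only exists on Windows
    if ([System.Environment]::OSVersion.Platform -eq 'Win32NT') {
        Unblock-File -LiteralPath $exe
    }
    return $exe
}

# Usage:
# $azcopy = Get-AzCopyExe -Folder "C:\git\thinBlog\pst ex import\azcopy\"
# & $azcopy --version
```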

Folder structure

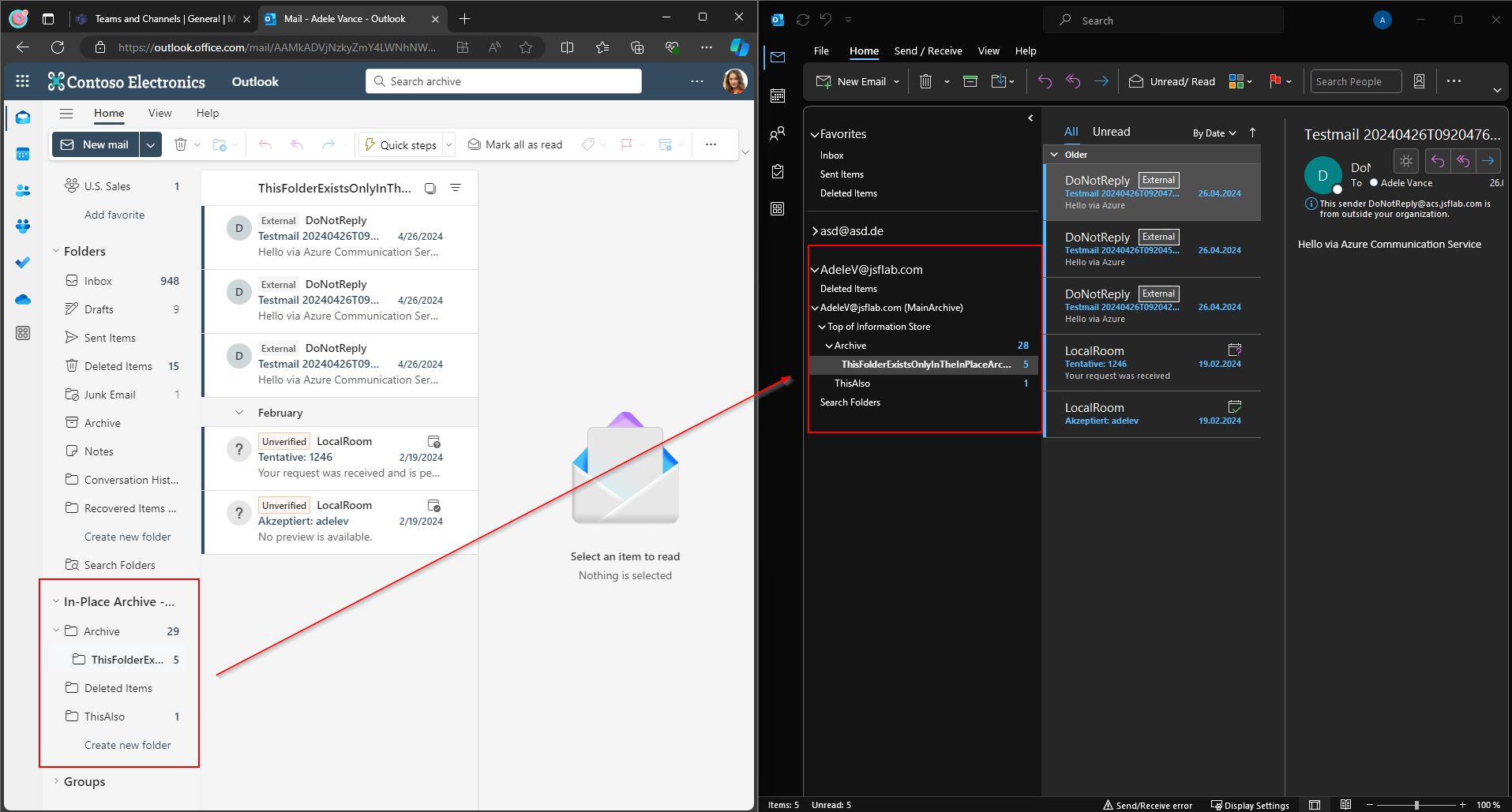

We use folders and file names to map the PSTs to the destination mailboxes. The PST import, which I will explain in an upcoming article, assumes one folder per user, named after the destination UPN and containing the PSTs of the related source user. Each PST file is named after the source user's UPN.

You need to prepare this structure yourself, for example with PowerToys PowerRename to rename the folders generated by the PST export. I used the following PowerShell commands:

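```powershell
$targetUPNDomain = "@jsflab.com"
$pstRootPath = "C:\git\thinBlog\pst ex import\Export\ExportedPSTs"

$folders = Get-ChildItem -Path $pstRootPath -Directory

foreach ($folder in $folders) {
    # Keep the part of the folder name before '@' and append the destination domain
    $newName = $folder.Name.Split('@')[0] + $targetUPNDomain
    # Skip folders that already have the target name
    if ($newName -ne $folder.Name) {
        Rename-Item -LiteralPath $folder.FullName -NewName $newName
    }
}
```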
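Once the folders are renamed, it can help to list the mapping the import will rely on before uploading anything. The following helper, Get-PstImportMap, is a hypothetical sketch for checking the structure, not part of the author's scripts; it assumes exactly one level of user folders under the root and PST files named <source UPN>.pst.

```powershell
# Minimal sketch (hypothetical helper): read back folder/file names as a UPN mapping
function Get-PstImportMap {
    param([Parameter(Mandatory)][string]$RootFolder)

    foreach ($folder in Get-ChildItem -LiteralPath $RootFolder -Directory) {
        foreach ($pst in Get-ChildItem -LiteralPath $folder.FullName -Filter '*.pst' -File) {
            [pscustomobject]@{
                TargetUPN = $folder.Name    # folder name = destination UPN
                SourceUPN = $pst.BaseName   # file name without .pst = source UPN
                Path      = $pst.FullName
            }
        }
    }
}

# Usage:
# Get-PstImportMap -RootFolder "C:\git\thinBlog\pst ex import\Export\ExportedPSTs" | Format-Table
```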

PST upload

Now that the target container and azcopy are prepared and the PSTs are in one place, we can upload the PST files. To do this, we combine PowerShell with azcopy: the PowerShell script collects all folders within the specified directory and starts one azcopy upload per folder, which copies the folder and its content (the PSTs) to the Azure storage container.
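The core of such an upload loop can be sketched as follows. This is a simplified illustration, not the downloadable script; the function names Get-AzCopyUploadArguments and Invoke-PstUpload are hypothetical. It relies on azcopy copy with --recursive, which uploads the local folder itself, so each user folder becomes a virtual directory (for a hypothetical user, anna@jsflab.com/...) in the container. The argument list is built separately so it can be inspected without running azcopy.

```powershell
# Simplified sketch (hypothetical helpers), not the script from GitHub
function Get-AzCopyUploadArguments {
    param(
        [Parameter(Mandatory)][string]$Folder,
        [Parameter(Mandatory)][string]$ContainerUrl,
        [Parameter(Mandatory)][string]$SasToken   # expected to start with '?'
    )
    # azcopy copy <local folder> <container URL + SAS token> --recursive
    return @('copy', $Folder, ($ContainerUrl.TrimEnd('/') + $SasToken), '--recursive')
}

function Invoke-PstUpload {
    param(
        [Parameter(Mandatory)][string]$AzCopyExe,
        [Parameter(Mandatory)][string]$RootFolder,
        [Parameter(Mandatory)][string]$ContainerUrl,
        [Parameter(Mandatory)][string]$SasToken
    )
    # One azcopy run per user folder
    foreach ($folder in Get-ChildItem -LiteralPath $RootFolder -Directory) {
        $azArgs = Get-AzCopyUploadArguments -Folder $folder.FullName -ContainerUrl $ContainerUrl -SasToken $SasToken
        & $AzCopyExe @azArgs
        if ($LASTEXITCODE -ne 0) {
            Write-Warning "azcopy reported exit code $LASTEXITCODE for $($folder.Name)"
        }
    }
}

# Usage with the values from the script's settings block:
# Invoke-PstUpload -AzCopyExe (Join-Path $azcopypath 'azcopy.exe') -RootFolder $PSTExportFolder `
#     -ContainerUrl $containerURL -SasToken $sastoken
```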

You can download the script from GitHub: thinBlog/uploadTo-azure.ps1 (thinformatics/thinBlog, main branch).

You need to adjust the following variables:

```powershell
# Load global "settings"
$PSTExportFolder = "C:\git\thinBlog\pst ex import\Export\ExportedPSTs" # Root folder which contains one folder per user with the related PSTs
$azcopypath = "C:\git\thinBlog\pst ex import\azcopy\" # The path where you have stored azcopy.exe
$containerURL = "https://scalablepstimport.blob.core.windows.net/pstimport" # The blob container URL: the Blob SAS URL you noted before, without the token part
$sastoken = "?sp=racw&st=2022-11-25T09:23:18Z..." # The Blob SAS token you noted before. Put a question mark in front of the string
```
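Since the token has to start with a question mark and needs the racw permissions, a small pre-flight check before starting the upload can save a failed run. The function Test-PstSasToken below is hypothetical; it assumes the standard SAS query parameters sp (permissions) and se (expiry) and returns a list of problems, where an empty result means the token looks usable. It cannot verify the signature itself.

```powershell
# Minimal sketch (hypothetical helper): sanity-check a SAS token string
function Test-PstSasToken {
    param(
        [Parameter(Mandatory)][string]$SasToken,
        [string]$RequiredPermissions = 'racw'
    )
    $problems = @()
    if (-not $SasToken.StartsWith('?')) { $problems += "SAS token must start with '?'" }

    # Split "sp=racw&st=...&se=..." into name/value pairs
    $pairs = @{}
    foreach ($part in ($SasToken.TrimStart('?') -split '&')) {
        $kv = $part -split '=', 2
        if ($kv.Count -eq 2) { $pairs[$kv[0]] = [uri]::UnescapeDataString($kv[1]) }
    }

    # Every required permission letter must appear in sp
    $sp = [string]$pairs['sp']
    foreach ($p in $RequiredPermissions.ToCharArray()) {
        if (-not $sp.Contains([string]$p)) { $problems += "missing permission '$p' in sp=$sp" }
    }

    # The expiry (se) must lie in the future
    if ($pairs.ContainsKey('se')) {
        $expiry = [DateTimeOffset]::Parse($pairs['se'], [Globalization.CultureInfo]::InvariantCulture)
        if ($expiry -lt [DateTimeOffset]::UtcNow) { $problems += "SAS token expired at $($pairs['se'])" }
    }
    return $problems
}

# Usage:
# $issues = @(Test-PstSasToken -SasToken $sastoken); if ($issues.Count) { $issues; return }
```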

Then start the script and lean back until all files have been uploaded to Azure. You can verify the results by checking the azcopy logs or by opening the container in the Azure portal and checking its content in the Storage browser.
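To check the upload from PowerShell rather than in the Storage browser, you can compare the local PSTs with the blob names in the container. The hypothetical helper Compare-PstUpload assumes the folder and file layout described above, so every PST is expected as a blob named <folder name>/<file name>; it returns the expected blob names that are missing. Listing the container needs the List permission, which a racw token does not include, so the commented usage assumes a signed-in context with a data-plane role such as Storage Blob Data Reader.

```powershell
# Minimal sketch (hypothetical helper): report PSTs that have no matching blob
function Compare-PstUpload {
    param(
        [Parameter(Mandatory)][string]$RootFolder,
        [string[]]$BlobName = @()
    )
    foreach ($folder in Get-ChildItem -LiteralPath $RootFolder -Directory) {
        foreach ($pst in Get-ChildItem -LiteralPath $folder.FullName -Filter '*.pst' -File) {
            # Expected blob name: <folder name>/<file name> (blob names are case-sensitive)
            $expected = $folder.Name + '/' + $pst.Name
            if ($BlobName -cnotcontains $expected) { $expected }   # emit missing blob name
        }
    }
}

# Usage (assumes Az.Storage, Connect-AzAccount and a role such as Storage Blob Data Reader):
# $ctx   = New-AzStorageContext -StorageAccountName 'scalablepstimport' -UseConnectedAccount
# $blobs = (Get-AzStorageBlob -Container 'pstimport' -Context $ctx).Name
# Compare-PstUpload -RootFolder $PSTExportFolder -BlobName $blobs
```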

Where are we now?

I’ve shown how you can upload large numbers of PSTs to a storage container that we can later use as the source for PST imports into Exchange Online mailboxes.

You can use this approach to upload all PSTs at once, but you can also rerun the script whenever something has changed in the source (e.g. you need a more recent PST export of a specific user's mailbox).
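For such partial reruns, one option is to upload only the user folders whose PSTs changed since the last run. The hypothetical helper Get-ChangedPstFolder below selects folders that contain at least one PST written after a given time; the commented usage assumes azcopy's default overwrite behavior (--overwrite=true), so re-uploaded PSTs replace the existing blobs.

```powershell
# Minimal sketch (hypothetical helper): user folders with PSTs newer than $Since
function Get-ChangedPstFolder {
    param(
        [Parameter(Mandatory)][string]$RootFolder,
        [Parameter(Mandatory)][datetime]$Since
    )
    Get-ChildItem -LiteralPath $RootFolder -Directory | Where-Object {
        # A folder qualifies if any PST inside was written after $Since
        @(Get-ChildItem -LiteralPath $_.FullName -Filter '*.pst' -File |
            Where-Object LastWriteTime -gt $Since).Count -gt 0
    }
}

# Usage (re-upload only changed folders):
# $azcopy = Join-Path $azcopypath 'azcopy.exe'
# Get-ChangedPstFolder -RootFolder $PSTExportFolder -Since (Get-Date).AddDays(-1) |
#     ForEach-Object { & $azcopy copy $_.FullName ($containerURL + $sastoken) --recursive }
```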

In the next part of this series, I will show you how you can import all the uploaded PST files into the related users’ mailboxes.